One complication in using the NAG C Library from .NET is callback functions which, in C, have arrays in their parameter lists. Take for example the optimization algorithm e04nfc(nag_opt_qp) for quadratic programming problems. This NAG function requires a callback function qphess of the following C prototype:

void qphess(Integer n, Integer jthcol, const double h[], Integer tdh,

const double x[], double hx[], Nag_Comm *comm);

In C# the corresponding delegate is

public delegate void NAG_E04NFC_QPHESS (int n, int jthcol,

IntPtr h_ptr, int tdh, IntPtr x_ptr, [In, Out] IntPtr hx,

ref CommStruct comm);

If you follow the C example program for nag_opt_qp as well as the style of the NAG C# examples you will write something like this for qphess in C#:

static void qphess0(int n, int jthcol, IntPtr h_ptr, int tdh,

IntPtr x_ptr, [In, Out] IntPtr hx_ptr,

ref CommStruct comm)

{

double[] xloc = new double[n];

double[] hloc = new double[n * n];

double[] hxloc = new double[n];

Marshal.Copy(h_ptr, hloc, 0, n * n);

Marshal.Copy(x_ptr, xloc, 0, n);

for (int i = 0; i < n; ++i) hxloc[i] = 0;

for (int i = 0; i < n; ++i)

for (int j = 0; j < n; ++j)

hxloc[i] += hloc[i * n + j] * xloc[j];

Marshal.Copy(hxloc, 0, hx_ptr, n);

}

Marshaling can be fairly expensive, and in some cases it may be best to avoid it. The cost is trivial for the small example programs NAG provides, but it quickly mounts with increasing problem size. For n of 1000 and this version of qphess, nag_opt_qp takes about 7 seconds to solve a typical problem on my laptop.

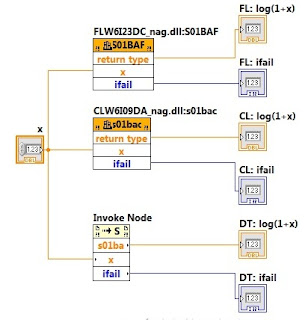

How else might we write qphess? Well, if we declare f06pac(dgemv) in the right way:

[DllImport("CLW6I09DA_mkl")]

public static extern void f06pac( MatrixTranspose trans, int m,

int n, double alpha, IntPtr a, int tda, IntPtr x, int incx,

double beta, [In, Out] IntPtr y, int incy);

we can avoid Marshaling

static void qphess1(int n, int jthcol, IntPtr h_ptr, int tdh,

IntPtr x_ptr, [In, Out] IntPtr hx_ptr,

ref CommStruct comm)

{

NagFunctions.f06pac(MatrixTranspose.NoTranspose, n, n,

1.0, h_ptr, tdh, x_ptr, 1, 0.0, hx_ptr, 1);

}

Written this way, e04nfc takes only 0.62 seconds to solve my problem.

However, when I did this I also avoided the cost of serial C# loops and bound-checking – not to mention destroyed any ease of calling f06pac elsewhere. Alternatively, we could use an unsafe block to avoid Marshaling:

static void qphess2(int n, int jthcol, IntPtr h_ptr, int tdh,

IntPtr x_ptr, [In, Out] IntPtr hx_ptr,

ref CommStruct comm)

{

unsafe

{

double* h_ptr_loc = (double*)h_ptr;

double* hx_ptr_loc = (double*)hx_ptr;

double* x_ptr_loc = (double*)x_ptr;

for (int i = 0; i < n; ++i) hx_ptr_loc[i] = 0;

for (int i = 0; i < n; ++i)

for (int j = 0; j < n; ++j)

hx_ptr_loc[i] += h_ptr_loc[i * n + j] * x_ptr_loc[j];

}

}

Written this way, e04nfc takes 3.5 seconds to solve my problem. This suggests a cost of 3.5 seconds for Marshaling. Since nag_opt_qp makes about 1000 calls to qphess to solve my test problem, Marshaling here comes with a price of 0.44 milliseconds per megabyte.

As a check, we may write qphess a fourth way. With the help of an overload:

public static void f06pac(MatrixTranspose trans, int m, int n,

double alpha, double[] a, int tda,

double[] x, int incx, double beta,

[In, Out] double[] y, int incy)

{

unsafe

{

fixed (double* a_ptr = &a[0])

fixed (double* y_ptr = &y[0])

fixed (double* x_ptr = &x[0])

{

f06pac(trans, m, n, alpha, (IntPtr)a_ptr, tda,

(IntPtr)x_ptr, incx,

beta, (IntPtr)y_ptr, incy);

}

}

}

we may write qphess like this:

static void qphess3(int n, int jthcol, IntPtr h_ptr, int tdh,

IntPtr x_ptr, [In, Out] IntPtr hx_ptr,

ref CommStruct comm)

{

double[] xloc = new double[n];

double[] hloc = new double[n * n];

double[] hxloc = new double[n];

Marshal.Copy(h_ptr, hloc, 0, n * n);

Marshal.Copy(x_ptr, xloc, 0, n);

NagFunctions.f06pac(MatrixTranspose.NoTranspose, n, n,

1.0, hloc, n, xloc, 1, 0.0, hxloc, 1);

Marshal.Copy(hxloc, 0, hx_ptr, n);

}

We’ve avoided the C# loops but have used Marshaling. In this case, e04nfc solves my problem in about 4.2 seconds, approximately confirming my previous estimate of 0.44 milliseconds per megabyte.

The inverse gives a memory bandwidth of 2.3 GB/s, which is between my laptop’s Passmark memory test scores for Read(Cached) and Write. Microsoft seems to be doing as well as one could reasonably ask here with Marshal.Copy.

Aside: The time to solution using a purely C# version of qphess can be reduced to about 0.93 seconds by parallelizing the outer loop

static void qphess4(int n, int jthcol, IntPtr h_ptr, int tdh,

IntPtr x_ptr, [In, Out] IntPtr hx_ptr,

ref CommStruct comm)

{

unsafe

{

double* h_ptr_loc = (double*)h_ptr;

double* hx_ptr_loc = (double*)hx_ptr;

double* x_ptr_loc = (double*)x_ptr;

Parallel.For(0, n, i =>

{

hx_ptr_loc[i] = 0;

for (int j = 0; j < n; ++j)

hx_ptr_loc[i] += h_ptr_loc[i * n + j] * x_ptr_loc[j];

}

);

}

}